Today’s media landscape is awash in misinformation, and whole communities are choosing opinions over facts. As The Ranger staff, we believe it is important to recognize that social media has become a dangerous breeding ground for misinformation, creating echo chambers that reinforce rhetoric regardless of how harmful it is to the rest of the population.

Algorithms on platforms like Facebook, TikTok or X prioritize reactions over honesty, which lets misinformation spread faster than the truth can. A 2018 MIT study found that false news on X, then known as Twitter, spread “significantly farther, faster, deeper, and more broadly” than the truth, largely because misinformation triggers a stronger emotional response.

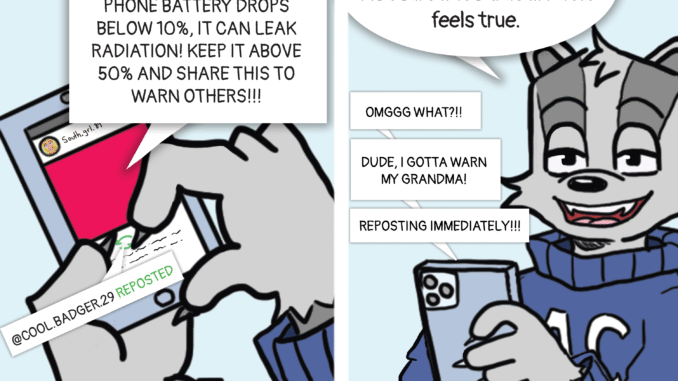

Once users are entrenched in an echo chamber, it becomes challenging to change their minds. According to a study from the Pew Research Center, 53% of adults in the U.S. regularly get their news from social media, but most do not trust how accurate the information is. Yet because they are repeatedly exposed to misleading information, social media users are falling into what psychologists call the “illusory truth effect,” in which repeated exposure to misinformation turns it into perceived fact.

The consequences of these echo chambers are not hypothetical; they have real-world impact. During the COVID-19 pandemic, the World Health Organization warned of an “infodemic” in which medical misinformation spread just as fast as the virus did. False claims about the vaccine, which treatments to use, and where the virus came from reached millions online, directly undermining the efforts of public health officials. The result cost many lives and set back medical progress.

Politics does not escape the damage either. According to a study by the University of Oxford’s Computational Propaganda Project, coordinated misinformation campaigns have been used to manipulate public opinion and elections in roughly 81 countries. At home, during the last few election cycles, we saw misinformation fuel polarized politics and extremist stances.

Research from Rice University, Carnegie Mellon University and MIT showed that even when people knew information might be false, they would accept it as truth if it aligned with their belief system. There is no better example than the “Deep State” narrative of the Trump campaigns, which aligned with right-leaning voters’ ideals and opinions even though nothing that followed Trump’s win exposed any such “deep state,” since it did not exist.

Misinformation is an invisible force that is hard to combat. Algorithms tailor our feeds and show us what we already agree with without providing outside information, thereby reinforcing our beliefs and isolating each individual from outside perspectives. When outside information is brought to an individual’s attention, it is dismissed as fake news. Social media has the potential to create connections, teach each of us to be better humans and create a space for learning, but only if we can learn to recognize what is at stake.

The fight to stop misinformation requires better content moderation, transparency about how algorithms work and improved digital literacy for all ages. When truth becomes subjective and facts become optional, we all lose.
